AI is evolving fast and so are the ways we need to prepare data for it. In our previous blog, Preparing Your Data for Agentic AI in 2 Minutes, we covered the basics of getting started and it sparked a lot of great questions from readers asking for more detail. As a response, we’ve written this deeper dive into the differences between preparing data for generative AI versus agentic AI.

Most teams are now comfortable with what it takes to train generative AI: large, static datasets that help models learn patterns to produce text, images, or other content. As agentic AI gains traction, however, a new challenge is emerging. These systems don’t just generate content; they make decisions, take actions and adapt to changing environments. That means the data they need looks very different.

In this post, we’ll unpack the key differences between data prep for generative and agentic AI, explain why it matters and share what teams should consider as they move toward more autonomous systems.

Generative AI vs. Agentic AI

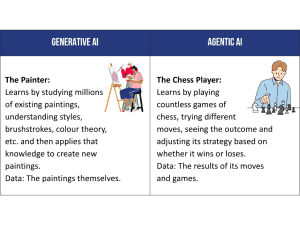

Generative AI is designed to create content, whether that’s text, images, code, or music, based on patterns it has learned from large datasets. These systems are powerful at mimicking human-like output by predicting what comes next in a sequence, but they don’t have goals or awareness of the outcomes of their responses. They react to prompts; they don’t plan or act with intent.

Agentic AI, by contrast, is built for action. These systems don’t just respond; they operate with a degree of autonomy to pursue goals, make decisions and interact with environments. Think of an AI assistant that can book meetings across calendars, execute multi-step tasks, or control a robot in the physical world. It’s not just about knowing what to say or do; it’s about choosing what to do next, based on feedback, context and a desired outcome.

To illustrate these differences, here is a simple analogy that I feel explains them well.

While both types of AI may use similar architectures under the hood, the way they operate and the kind of data they require are fundamentally different. The next sections explore why that difference matters.

The Role of Data in Each

Data is essential to both generative and agentic AI, but how it’s used and what it needs to include are very different.

For generative AI, data is about learning patterns. These models are trained on large, static datasets like text, images, or code to generate new content that mimics the style and structure of what they’ve seen. It’s all about scale and diversity: the more high-quality examples you feed in, the better the model gets at producing convincing outputs. The data doesn’t change or interact; it’s just rich material to learn from.

Agentic AI, on the other hand, needs data that supports action and decision-making. These systems aren’t just generating something; they’re trying to do something, most often in a changing environment. That means the data must include context, goals, possible actions and feedback. It’s less about static examples and more about helping the AI understand how to move through a process, adjust to outcomes and pursue objectives.

Effectively, generative AI learns from content while agentic AI learns from experience and that changes how you prepare the data behind them.

| | Generative AI | Agentic AI |
| --- | --- | --- |
| How it learns | Generative AI models (like Large Language Models for text, or image generation models like DALL-E) are trained on vast datasets of existing content (text, images, audio, code). | Agentic AI is designed to interact with an environment, make decisions, take actions and learn from the outcomes of those actions. This often involves techniques like reinforcement learning, where the agent receives rewards or penalties based on its performance in a dynamic environment. |
| What it learns | It learns the patterns, structures, styles and relationships within that content. Its goal is to understand how that content is composed so it can generate new, similar content. | It learns effective strategies, decision-making processes and how to adapt its behaviour to achieve specific goals in a changing environment. It learns how to act and what actions lead to desired results. |
| Data preparation | This typically involves collecting and cleaning massive amounts of static data (documents, images, audio files). The focus is on the quality and diversity of the content itself. For example, training an LLM requires a huge corpus of text, while an image generator needs millions of images with descriptive captions. | This is less about preparing static “content” and more about designing the environment, defining rewards/penalties and simulating or observing interactions. The “data” it learns from is the stream of observations, actions and feedback from its interactions with the world (real or simulated). For example, a self-driving car AI agent learns from driving simulations and real-world driving data (sensor inputs, human corrections, outcomes of decisions). |

Key Differences in Data Preparation

While both generative and agentic AI fall under the same broad category, they rely on very different kinds of data. Generative AI learns by studying large, diverse libraries of examples to replicate patterns in things like text, images or code. Agentic AI, on the other hand, requires a more interactive setup. It needs data that supports goal-directed decision-making, experimentation and continuous adaptation over time.

Below are the key differences in their data preparation:

- Goal-Oriented Data: Agents need to know why they’re acting. Data should include goals, success criteria and enough context to support smart decision-making — not just examples to copy.

- Environment Simulation: Agents operate within environments, so they need data that models the world around them: possible actions, state changes and outcomes. It’s more like building a sandbox than a dataset.

- Temporal and Sequential Data: Since agents act over time, they need to understand how things evolve. Static snapshots won’t cut it; they need sequences, transitions and long-term cause and effect.

- Dynamic and Real-Time Data: Unlike models trained once on fixed data, agents often rely on live or constantly updating inputs. Their data needs to reflect a changing world.

- Feedback Loops: Agents learn by doing. That means capturing the results of their actions and feeding that information back into the system to support ongoing learning and adaptation.
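To make these five points concrete, here is a minimal sketch of what a single agentic training record could look like. The `Step` and `Episode` names, their fields and the meeting-booking values are all our own illustrative assumptions, not a standard schema; the point is simply that the goal, the action space, the time-ordered sequence and the feedback all sit in the same record.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Step:
    """One transition: what the agent saw, what it did, what came back."""
    observation: dict[str, Any]
    action: str
    reward: float                      # feedback signal for this action
    next_observation: dict[str, Any]   # state of the world after the action
    timestamp: float                   # seconds since episode start (assumed unit)


@dataclass
class Episode:
    """A goal plus the ordered sequence of steps taken toward it."""
    goal: str
    success_criteria: str
    available_actions: list[str]
    steps: list[Step] = field(default_factory=list)

    def record(self, step: Step) -> None:
        # Reject actions outside the declared action space and
        # out-of-order timestamps, so the sequence stays usable later.
        if step.action not in self.available_actions:
            raise ValueError(f"unknown action: {step.action}")
        if self.steps and step.timestamp < self.steps[-1].timestamp:
            raise ValueError("steps must be recorded in time order")
        self.steps.append(step)

    def total_reward(self) -> float:
        return sum(s.reward for s in self.steps)


# Hypothetical usage: a meeting-booking assistant.
episode = Episode(
    goal="book a 30-minute meeting",
    success_criteria="all attendees accept",
    available_actions=["propose_slot", "send_invite"],
)
episode.record(Step({"free_slots": 3}, "propose_slot", 0.0, {"free_slots": 2}, 0.0))
episode.record(Step({"free_slots": 2}, "send_invite", 1.0, {"invite_sent": True}, 4.2))
```

Compare this with a generative training example, which would typically be just a piece of content with no goal, no action and no outcome attached.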

Why Agentic Data Is Harder Than It Looks

While preparing data for agentic AI shares some surface similarities with traditional AI workflows, it introduces a set of deeper, more complex challenges. Agents are expected to act autonomously, learn through interaction and adapt to changing circumstances. As a result, the data must do much more than provide static examples. It needs to actively support reasoning, exploration and learning over time, often under conditions of uncertainty and delayed feedback.

Here are some of the key challenges that make preparing data for agentic AI fundamentally different:

Context Sensitivity

Agents must “understand” evolving contexts, meaning you may need to model the world, not just facts.

In agentic AI, the environment isn’t static; it evolves based on the agent’s actions and external factors. Data must therefore support situational awareness, including evolving parameters, hidden variables and contextual nuances. It’s not enough to provide isolated facts; agents need datasets that show how context changes over time and how those changes should influence decision-making. This often means designing richer, multi-layered datasets or incorporating environment simulators alongside static data.

Exploration vs Exploitation

Data must allow for discovery, not just execution.

Unlike traditional models that only optimise for the best-known outcomes, agents must explore new possibilities to improve performance over time. This tension between exploring unknown options and exploiting known strategies requires data that doesn’t just highlight “the right answer,” but encourages risk-taking, hypothesis testing and learning from imperfect information. In data terms, it means including uncertain, incomplete, or ambiguous scenarios, not just perfectly labelled ones.
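One common way to express this trade-off in code is an epsilon-greedy rule: most of the time the agent picks the action with the best observed average reward, and a small fraction of the time it tries something at random. The sketch below is only an illustration of that general technique; the class name, the default `epsilon` of 0.1 and the action labels are our own assumptions.

```python
import random


class EpsilonGreedyChooser:
    """Pick the best-known action most of the time, a random one otherwise."""

    def __init__(self, actions, epsilon=0.1, seed=None):
        if not actions:
            raise ValueError("at least one action is required")
        self.actions = list(actions)
        self.epsilon = epsilon              # probability of exploring
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in self.actions}
        self.mean_reward = {a: 0.0 for a in self.actions}

    def choose(self):
        # Explore: try a random action, even one that looks worse so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        # Exploit: take the action with the highest observed mean reward.
        return max(self.actions, key=lambda a: self.mean_reward[a])

    def update(self, action, reward):
        # Incremental mean, so no reward history has to be stored.
        self.counts[action] += 1
        n = self.counts[action]
        self.mean_reward[action] += (reward - self.mean_reward[action]) / n


# Hypothetical usage with two made-up actions.
chooser = EpsilonGreedyChooser(["email_reminder", "sms_reminder"], epsilon=0.1, seed=0)
action = chooser.choose()
chooser.update(action, reward=1.0)
```

The data implication is that logs produced this way will contain deliberately “suboptimal” actions. Those records are worth keeping, because they are how the agent finds out whether its current best guess is actually the best option.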

Sparse Rewards

Real-world feedback may be rare, noisy, or delayed, so training data must account for long-term outcomes.

In real-world environments, clear feedback (“reward signals”) is often delayed, rare, or noisy. For example, a robot may only know it succeeded in its mission hours after starting it. This creates a challenge: the agent must learn which earlier actions contributed to later success or failure. Data preparation must reflect this by capturing long chains of actions and results, not just immediate cause-and-effect. It may also require simulating or engineering synthetic rewards during training to guide learning when real-world feedback is too sparse.
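A standard way to spread a late reward back over the actions that led to it is the discounted return used in reinforcement learning: each step is credited with its own reward plus a discounted share of everything that followed. The function below is a minimal sketch of that calculation; the function name and the `gamma` values are illustrative assumptions, and real systems usually tune the discount factor per task.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return, for every step, its reward plus discounted future rewards."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step can reuse the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# A four-step episode where the only reward arrives at the very end.
print(discounted_returns([0.0, 0.0, 0.0, 1.0], gamma=0.5))
# -> [0.125, 0.25, 0.5, 1.0]
```

Even though the first three steps received no direct feedback, each one now carries a share of the final outcome. This only works if the full chain of actions was recorded in order, which is why long, unbroken sequences matter more here than isolated examples.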

What This Means for Your Team

Moving from generative to agentic AI isn’t just a tech upgrade; it changes how teams need to think about data. Here are some practical actions your organisation can begin to undertake:

- Start with your data pipelines. Agentic systems often need real-time or event-driven data, not just big static datasets. You may need to bring in new data sources that reflect what’s happening in the moment.

- Make goals part of your data. Agents need to understand what success looks like. That means baking goals, constraints and outcome signals into your data — not just feeding examples.

- Build in feedback loops. These systems learn by doing, so you’ll need ways to capture what happens after each action and use that to inform future behaviour.

- Work cross-functionally. Since agentic data touches product, engineering and ops, it’s smart to get everyone aligned early to avoid friction later.
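As a starting point for the pipeline and feedback-loop actions above, the sketch below shows one simple way to capture outcome events as JSON Lines text, a format most streaming and batch tools can ingest. The function names and the event fields (`ts`, `goal`, `action`, `outcome`, `reward`) are assumptions for illustration; your own schema will depend on your systems.

```python
import json
import time


def make_event(goal, action, outcome, reward, ts=None):
    """Build one feedback event that ties an action to its goal and result."""
    return {
        "ts": time.time() if ts is None else ts,  # Unix time in seconds
        "goal": goal,
        "action": action,
        "outcome": outcome,
        "reward": reward,
    }


def to_jsonl(events):
    """Serialise events as one JSON object per line."""
    return "".join(json.dumps(e, sort_keys=True) + "\n" for e in events)


def from_jsonl(text):
    """Parse JSON Lines text back into a list of event dicts."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


# Hypothetical usage: log what happened after an agent's action.
events = [make_event("resolve ticket", "suggest_article", "customer_confirmed_fix", 1.0)]
payload = to_jsonl(events)
restored = from_jsonl(payload)
```

Keeping the goal and the outcome on the same record as the action is the key design choice: it means product, engineering and ops can all read the same event and agree on what the agent was trying to do and whether it worked.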

So, What’s the Bottom Line?

Ultimately, agentic AI requires smarter, more responsive data. If your data doesn’t reflect change, context and consequences, your agents won’t either. As AI evolves from content generation to autonomous action, the way we handle data has to evolve too. Generative AI is all about patterns and scale; agentic AI is about goals, environments and adaptability.

For teams exploring agentic systems, the key takeaway is this: your data needs to do more than inform. It needs to guide, respond and help your AI learn over time.

This shift may feel big, but it’s where the real potential lies. Start small, stay focused on the goals and build data foundations that support action, not just output.

At Nephos, we combine technical expertise and the strategic business value of traditional professional service providers to deliver innovative data solutions. We’re familiar with the range of challenges organisations face in effectively discovering, governing and utilising their data in various strategic projects, including AI. Find out how.